Dr. Johannes Hirth is no longer employed in the group as of 31 October 2024.

Room 0443

Universität Kassel

Department of Electrical Engineering/Computer Science

Knowledge & Data Engineering Group

Wilhelmshöher Allee 73

34121 Kassel

Tel.: +49 561 804-6350

ORCID: 0000-0001-9034-0321

Email: hirth@cs.uni-kassel.de (PGP-Key: 0x2D9F0D2E01928BC8)

Research Center for Information System Design (ITeG)

About Me

I am a German researcher interested in explainable artificial intelligence. In my research, I focus on symbolic methods for generating conceptual views. These are hierarchical structures that encode which abstract concepts are entailed in data and how they depend on each other. The resulting structures are well suited for structuring data, concept-based navigation, and rule inference. I contribute both theoretical insights and applications in the realm of topic models, latent representations of deep learning models, and knowledge representation in general.

In my spare time, I often find myself optimizing my Emacs workflow.

Selected Scientific Works (5 of 15)

- Hirth, J., Hanika, T.: The Geometric Structure of Topic Models. CoRR abs/2403.03607 (2024).

- Hirth, J., Horn, V., Stumme, G., Hanika, T.: Ordinal Motifs in Lattices (2023).

- Schäfermeier, B., Hirth, J., Hanika, T.: Research Topic Flows in Co-Authorship Networks. Scientometrics (2022). https://doi.org/10.1007/s11192-022-04529-w

- Hanika, T., Hirth, J.: Quantifying the Conceptual Error in Dimensionality Reduction. In: Braun, T., Gehrke, M., Hanika, T., Hernandez, N. (eds.) Graph-Based Representation and Reasoning – 26th International Conference on Conceptual Structures, ICCS 2021, Virtual Event, September 20–22, 2021, Proceedings, pp. 105–118. Springer (2021).

- Hanika, T., Hirth, J.: On the Lattice of Conceptual Measurements. Inf. Sci. 613, 453–468 (2022).

Dissertation

Hirth, J.: Conceptual Data Scaling in Machine Learning. Dissertation, Universität Kassel, Fachbereich Elektrotechnik/Informatik, Kassel (2024).

Talks

- May 2024: ‘Conceptual Structures of Topic Models’, 1st Workshop on Machine Learning under Weakly Structured Information, LMU Munich, Germany

- July 2023: ‘Scaling Dimension’, ICFCA 2023, University of Kassel, Kassel, Germany

- January 2023: ‘Explaining Ordinal Data Structures via Ordinal Motifs’, Concept Lattice Based Topological Data Analysis and Reasoning, Schloss Dagstuhl Computer Science Center, Wadern, Germany

- May 2022: ‘Formale Begriffsanalyse & Mapping of Controversies’ (Formal Concept Analysis & Mapping of Controversies), e_valuate!, Research Center for Information System Design (ITeG), Kassel, Germany

- September 2021: ‘Quantifying the Conceptual Error in Dimensionality Reduction’, ICCS 2021, Bolzano, Italy

- September 2021: ‘Quantifying the Conceptual Error in Dimensionality Reduction’, Applications of Formal Sciences: Explainable AI, Schloss Dagstuhl Computer Science Center, Wadern, Germany

- July 2021: ‘Exploring Scale-Measures of Data Sets’, ICFCA 2021, Université de Strasbourg, Strasbourg, France

- March 2021: ‘Discovery of Conceptual Measurements/Entdecken Begrifflicher Messungen’, Explainable Artificial Intelligence, Schloss Dagstuhl Computer Science Center, Wadern, Germany

- September 2020: ‘Navigating Conceptual Measurements’, Dagstuhl Meeting on the Application of Formal Sciences: Knowledge Engineering, Schloss Dagstuhl Computer Science Center, Wadern, Germany

- June 2019: ‘Conexp-Clj – A Research Tool for FCA’, ICFCA 2019, Frankfurt University of Applied Sciences, Frankfurt, Germany

Organization of Workshops, Tutorials, and Other Events

- Local Organizer: ICFCA 2023, University of Kassel, Kassel, Germany

- Workshop: ‘Collaborative HAI-Learning through Conceptual Exploration’, Organizers: Bernhard Ganter, Tom Hanika, Johannes Hirth and Sergei Obiedkov, HHAI 2024, Malmö University, Malmö, Sweden

- Workshop: ‘Ordinal Methods for Knowledge Representation and Capture (OrMeKR)’, Organizers: Tom Hanika, Dominik Dürrschnabel, Johannes Hirth, K-CAP 2023, Pensacola, Florida, USA

- Workshop: ‘Preprocessing and Scaling of Contextual Data – PreSCoD’, Organizers: Tom Hanika, Johannes Hirth, Ángel Mora Bonilla, ICFCA 2023, University of Kassel, Kassel, Germany

- Tutorial: ‘Conexp-Clj – A Functional Approach to Applying Formal Concept Analysis’, Organizers: Jana Fischer, Tom Hanika, Johannes Hirth, ICFCA 2023, University of Kassel, Kassel, Germany

Reviewing

PC Member

- Web Science Conference, 2025

Reviewer

Subreviewer

- Concepts, 9–13 September 2024, Cádiz, Spain

- ISWC, 6–10 November 2023, Athens, Greece

- ISWC, 23–27 October 2022, Hangzhou, China

- ICCS, 12–15 September 2022, Münster, Germany

- ECML PKDD, 19–23 September 2022, Grenoble, France

- FCA4AI-2021, 21 August 2021, Montréal, Canada

- ECML PKDD, 13–17 September 2021

- 17th Russian Conference on Artificial Intelligence, 21–25 October 2019, Ulyanovsk, Russia

- 11th ACM Conference on Web Science, 30 June–3 July 2019, Boston, MA, USA

Teaching

- Artificial Intelligence (2024)

Teaching Assistance

- Functional Programming in Clojure: 2019–2024

- Conceptual Data Analysis (Lab): 2019–2023

- Conceptual Data Analysis: 2022

- Artificial Intelligence: 2022

- Knowledge Discovery: 2021

- Social Network Analysis (Lab): 2020

- Databases: 2020

Projects

Alongside my theoretical research, I mainly work on the following projects:

Conexp-Clj – A Research Tool for FCA

The research unit Knowledge & Data Engineering continues the development of the research tool conexp-clj, originally created by Dr. Daniel Borchmann. Its ongoing development is supervised by Dr. Tom Hanika. With this tool at hand, the research group can test and analyze its theoretical work in formal concept analysis and related fields. The most recent pre-compiled release candidate is available for download.

A presentation of the tool was given in the talk ‘Conexp-Clj – A Research Tool for FCA’ at ICFCA 2019.

BibSonomy

BibSonomy is a scholarly social bookmarking system in which researchers manage their collections of publications and web pages. BibSonomy is an open-source project, continuously developed by researchers in Kassel, Würzburg, and Hanover. As a test bed for recommendation and ranking algorithms, and through its publicly available datasets containing traces of user behavior on the Web, BibSonomy has been the subject of various scientific studies.


FAIRDIENSTE

The project Fair Digital Services: “Co-Valuation in the Design of Data-Economy Business Models (FAIRDIENSTE)” follows an interdisciplinary approach that combines sociological and (business) informatics perspectives. It studies fair business models that aim at cooperation and the mediation of values.

One goal of this work is to further develop computational methods for qualitative data analysis that make the landscape of conflicts arising at the customer interfaces of digital services transparent and that enable consumers to critically assess different value perspectives.

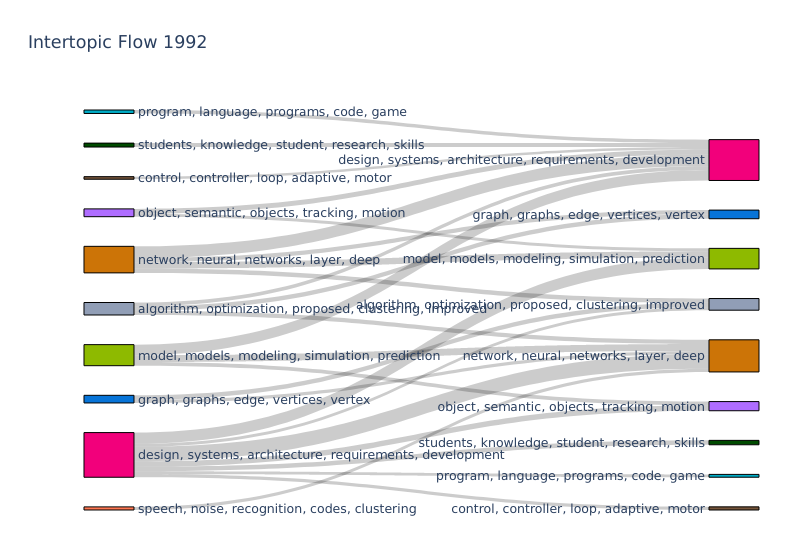

Interactive research topic flow web application

Associated Papers:

- Schäfermeier, B., Hirth, J., Hanika, T.: Research Topic Flows in Co-Authorship Networks. Scientometrics, 1–28 (2022).

Extracurricular Activities

MINT Week at the University of Kassel

During MINT Week 2023 at the University of Kassel, we contributed a workshop titled ‘Which is better? Scale, organise and understand data properly’.

100 Tage MINT (100 Days of STEM)

During documenta 2022, the Schülerforschungszentrum Nordhessen, a research centre for school students, hosted a 100 Days of STEM event. We contributed a workshop titled ‘Which is better? Scale, organise and understand data properly’.

Supervision of Student Internships

I have supervised annual two-week student internships at the University of Kassel, on simulating traffic with cellular automata (2016) and on analyzing the Twitter social network (2018–2023).

Teaching Programming in Clojure

I have taught an annual Clojure programming course (2019–2024).